A constantly evolving codebase can suffer from bugs that neither unit nor integration tests can catch. At PayFit, we invested early in end-to-end tests to catch critical bugs before they reach production. As our engineering team grew and our architecture evolved from monoliths to microservices, we faced multiple challenges in scaling our end-to-end tests.

Although they can be costly to maintain and slow to run, end-to-end tests give engineers a strong level of confidence when releasing features, especially features involving complex user journeys that span services owned by multiple teams.

Deploy an environment to run tests

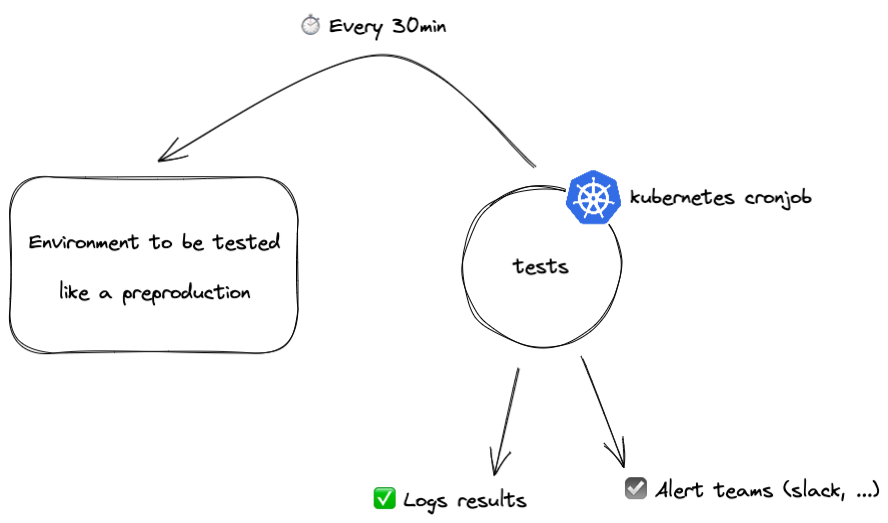

Deploying the code under test to a dedicated environment is a simple yet effective way to run end-to-end tests.

If you want your tests to run on every commit in continuous integration, you'll have to create new environments on demand. As our number of engineers increased, doing so became too slow and costly for us. At PayFit, we decided instead to run tests periodically against an environment dedicated to testing: new code is deployed there first, and goes to production as soon as the end-to-end tests pass. In our infrastructure, a Kubernetes CronJob is a perfect way to run tests periodically.
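As a sketch of such a periodic run, the snippet below builds a Kubernetes `batch/v1` CronJob manifest (the API version that has been stable since Kubernetes v1.21). To keep all examples in TypeScript, the manifest is a typed object serialized to JSON, which `kubectl apply -f` accepts just like YAML. The job name, image, `npm run test:e2e` command and 30-minute schedule are assumptions for illustration, not PayFit's actual configuration.

```typescript
// Minimal typed shape of the CronJob fields used here (not the full Kubernetes schema).
interface CronJobManifest {
  apiVersion: "batch/v1";
  kind: "CronJob";
  metadata: { name: string };
  spec: {
    schedule: string;
    concurrencyPolicy: "Allow" | "Forbid" | "Replace";
    jobTemplate: {
      spec: {
        backoffLimit: number;
        template: {
          spec: {
            restartPolicy: "Never" | "OnFailure";
            containers: { name: string; image: string; command: string[] }[];
          };
        };
      };
    };
  };
}

// Builds a CronJob that runs the end-to-end suite against the test environment.
// The name, image and command are hypothetical placeholders.
function e2eCronJob(image: string, schedule: string): CronJobManifest {
  return {
    apiVersion: "batch/v1",
    kind: "CronJob",
    metadata: { name: "e2e-tests" },
    spec: {
      schedule,
      // Skip a scheduled run while the previous one is still in progress.
      concurrencyPolicy: "Forbid",
      jobTemplate: {
        spec: {
          // Do not re-run the whole suite on failure; retries belong inside the test run.
          backoffLimit: 0,
          template: {
            spec: {
              restartPolicy: "Never",
              containers: [{ name: "e2e", image, command: ["npm", "run", "test:e2e"] }],
            },
          },
        },
      },
    },
  };
}

// Every 30 minutes (an assumed interval). Save the output and run `kubectl apply -f cronjob.json`.
const manifest = e2eCronJob("registry.example.com/e2e-tests:latest", "*/30 * * * *");
console.log(JSON.stringify(manifest, null, 2));
```

`concurrencyPolicy: "Forbid"` is the design choice worth noting here. If a run takes longer than the schedule interval, Kubernetes skips the next run instead of letting two suites compete for the same test environment.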

Choose a test framework: Cypress vs TestCafe

There are some excellent resources on the web to help you pick a test framework. If you don't know where to start and want to write your tests in JavaScript, I would recommend going with Cypress or TestCafe.

We currently use the TestCafe framework to run our end-to-end tests. The main difference between TestCafe and Cypress is that TestCafe runs in Node.js, while Cypress runs in the browser. Running in Node.js lets your tests connect directly to a database to insert fixtures, while running in the browser requires you to write a server that inserts fixtures into the database, and to call that server during test setup and teardown.

Write tests according to industry best practices: the "Page object" pattern

Now that you have chosen a test framework, you will soon write your first tests and run them on your computer.

Adopt the Page object pattern right from the start to organize your tests. This pattern keeps tests maintainable as the codebase evolves, and especially as the CSS changes: each page's selectors live in a single page object, so a markup change only has to be fixed in one place.
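Here is a minimal sketch of a page object. It assumes a hypothetical `Driver` interface that stands in for the test framework. Its `typeText` and `click` methods loosely mirror TestCafe's test controller, while `textOf` is invented for this example. The `data-test` selectors are hypothetical as well. A fake driver records actions so the sketch runs without a browser.

```typescript
// Hypothetical minimal driver interface standing in for the test framework.
interface Driver {
  typeText(selector: string, text: string): Promise<void>;
  click(selector: string): Promise<void>;
  textOf(selector: string): Promise<string>;
}

// Page object: the only code that knows the login page's selectors.
class LoginPage {
  // If the markup or CSS changes, only these (hypothetical) selectors need updating.
  private readonly selectors = {
    email: "[data-test=login-email]",
    password: "[data-test=login-password]",
    submit: "[data-test=login-submit]",
    error: "[data-test=login-error]",
  };
  private readonly driver: Driver;

  constructor(driver: Driver) {
    this.driver = driver;
  }

  // A user-level action: tests call this instead of touching selectors themselves.
  async logIn(email: string, password: string): Promise<void> {
    await this.driver.typeText(this.selectors.email, email);
    await this.driver.typeText(this.selectors.password, password);
    await this.driver.click(this.selectors.submit);
  }

  errorMessage(): Promise<string> {
    return this.driver.textOf(this.selectors.error);
  }
}

// Fake driver that records actions, so the sketch runs without a browser.
class RecordingDriver implements Driver {
  readonly actions: string[] = [];
  async typeText(selector: string, text: string): Promise<void> {
    this.actions.push(`type ${selector} ${text}`);
  }
  async click(selector: string): Promise<void> {
    this.actions.push(`click ${selector}`);
  }
  async textOf(selector: string): Promise<string> {
    return selector === "[data-test=login-error]" ? "Invalid credentials" : "";
  }
}
```

Tests then call `loginPage.logIn(...)` and never mention a selector, so a CSS refactor only touches `LoginPage`.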

Cypress maintains comprehensive documentation on end-to-end testing best practices, so don't hesitate to take a look!

Running tests in Docker

Running tests on your computer is usually easy and well documented, but running them in Docker is a bit trickier.

In Docker, tests run without a graphical interface, in what is called *headless* mode (see Headless Chrome), so you need a Docker image that contains both your test framework and Headless Chrome. TestCafe provides a Docker image, but that image is not convenient if you use the JavaScript API to start your tests.

Cypress provides Docker images with both Chrome and Node.js that you can use as the base for your own Dockerfile. We even use a Cypress Docker image with the TestCafe framework!

Running tests in Kubernetes: a trick to actually make it work

If running TestCafe in Docker is tricky, running TestCafe as a Kubernetes CronJob is even trickier, as we learned the hard way.

TestCafe tests should run in Docker without any issue. However, if you run the same image as a Kubernetes job, then depending on your Kubernetes version, your tests may randomly fail midway with a "browser disconnected" error.

This happens because Kubernetes deprecated Docker as a container runtime in v1.20 (support was removed in v1.24). Docker images still work the same way (see containerd vs Docker); they are simply run by containerd instead of Docker. The issue is that TestCafe relies on the npm package is-docker, which, confusingly, reports that you are not in Docker when your container runs on a containerd-based Kubernetes cluster, because it looks for Docker-specific markers such as the /.dockerenv file.

The trick is to make sure that the --no-sandbox and --disable-dev-shm-usage arguments are passed to Chrome whenever tests run in a container, instead of relying on automatic container detection. In TestCafe, you have to enforce them yourself for now. With that in place, a CronJob that starts the tests periodically should work like a charm!
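One way to enforce the flags is to build the browser string yourself. TestCafe accepts Chrome flags appended to the browser alias, as in `"chrome:headless --no-sandbox"`. The sketch below assumes a runtime-independent detection rule: Kubernetes injects `KUBERNETES_SERVICE_HOST` into every pod, and `RUNNING_IN_CONTAINER` is a hypothetical variable you would set yourself in the Dockerfile. Neither depends on Docker being the runtime.

```typescript
// Chrome flags that avoid the "browser disconnected" crashes inside containers.
const CONTAINER_CHROME_FLAGS = ["--no-sandbox", "--disable-dev-shm-usage"];

// Runtime-independent container check (assumption: see the variables described above).
function looksLikeContainer(env: Record<string, string | undefined>): boolean {
  return Boolean(env.KUBERNETES_SERVICE_HOST) || env.RUNNING_IN_CONTAINER === "true";
}

// Builds a TestCafe browser string such as "chrome:headless --no-sandbox --disable-dev-shm-usage".
function chromeBrowserString(inContainer: boolean, headless = true): string {
  const alias = headless ? "chrome:headless" : "chrome";
  const flags = inContainer ? CONTAINER_CHROME_FLAGS : [];
  return [alias, ...flags].join(" ");
}

// Usage with TestCafe's JavaScript API (not executed in this sketch):
//   const browser = chromeBrowserString(looksLikeContainer(process.env));
//   await testcafe.createRunner().src(["tests/"]).browsers(browser).run();
```

The detection only exists so that local runs keep Chrome's sandbox. Always passing the flags in your test image would be an equally valid, simpler choice.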

Keep tests close to the code

As the number of teams grows, end-to-end tests should stay close to the business logic, and their natural place is the same repository as the domain code. We started with centralized tests in one shared repository, and we are now moving toward tests distributed across multiple repositories.

If, like us, you have organized your code into one monorepo per domain, then the tests of a domain live in the same repository as the rest of the domain logic. This means you may have to package some shared logic to use it across the different groups of tests. For example, if you built your own test reporter in JavaScript, you could publish it as an npm package and share it across repositories.

The complexity of running end-to-end tests in a distributed environment

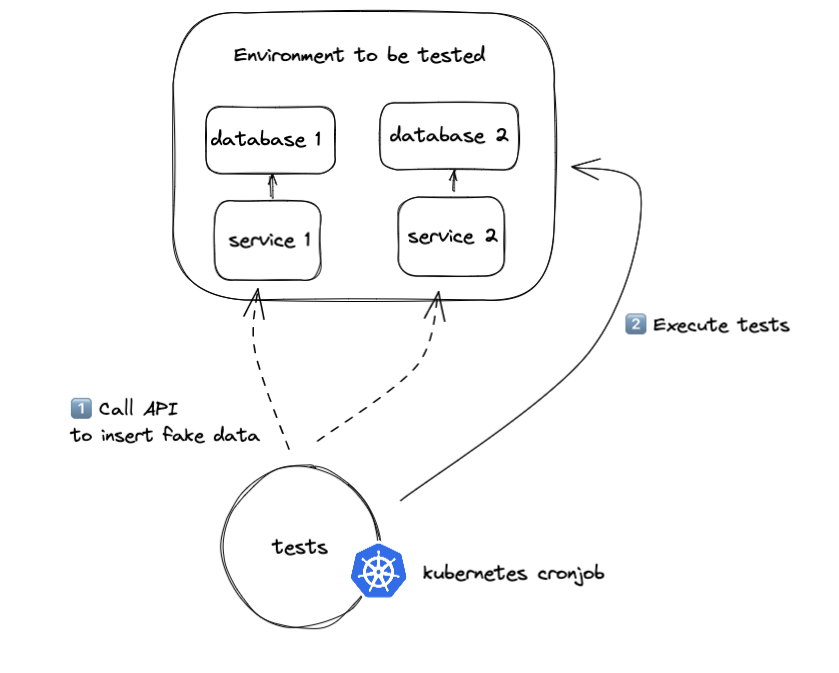

At PayFit we aim for database isolation and ownership per domain, so we don't want end-to-end tests to connect directly to all databases.

If your product requires a core "customer" entity to work, then every end-to-end test has to be able to create and delete customers. This is a problem because only the "customer API" should be allowed to write to its database, not end-to-end tests belonging to another domain.

You can solve this by calling the APIs of the services that wrap each database to create and delete fixtures. Building these APIs takes some effort, but it keeps the databases isolated instead of having every test connect to each database directly.
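As a sketch of API-based fixtures, assume a hypothetical `CustomerApi` interface. In practice it would be an HTTP client for endpoints owned by the customer team. The helper creates a customer through that API, runs the test body, and deletes the customer afterwards, even if the body throws. The random suffix in `uniqueName` keeps two parallel runs of the same test from referencing the same data. An in-memory fake replaces the real service so the sketch runs on its own.

```typescript
// Hypothetical shape of the customer domain's API.
interface Customer {
  id: string;
  name: string;
}
interface CustomerApi {
  createCustomer(name: string): Promise<Customer>;
  deleteCustomer(id: string): Promise<void>;
}

// Timestamp plus random suffix, so parallel runs never share fixture data.
function uniqueName(prefix: string): string {
  return `${prefix}-${Date.now().toString(36)}-${Math.random().toString(36).slice(2, 8)}`;
}

// Setup and teardown go through the owning API, never through its database.
async function withCustomer<T>(api: CustomerApi, body: (customer: Customer) => Promise<T>): Promise<T> {
  const customer = await api.createCustomer(uniqueName("e2e-customer"));
  try {
    return await body(customer);
  } finally {
    // Runs whether the test passed or failed.
    await api.deleteCustomer(customer.id);
  }
}

// In-memory stand-in for the customer service.
class FakeCustomerApi implements CustomerApi {
  readonly customers = new Map<string, Customer>();
  private nextId = 1;
  async createCustomer(name: string): Promise<Customer> {
    const customer = { id: String(this.nextId++), name };
    this.customers.set(customer.id, customer);
    return customer;
  }
  async deleteCustomer(id: string): Promise<void> {
    this.customers.delete(id);
  }
}
```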

What experience with end-to-end tests in production taught us

End-to-end tests often fail randomly for various reasons, such as limited network bandwidth, CPU throttling, or race conditions, especially in their first iterations.

Based on our experience, we have come up with a list of best practices to make sure tests are effective at preventing regressions:


1️⃣ Define clear ownership and accountability

- A team that owns a product experience should write the tests for that experience, since it has the business knowledge of how that experience should behave.

- If a test fails, the team owning it should be notified and react accordingly, without impacting other teams. Don't hesitate to build your own test reporter to instantly notify the right engineers when a test fails.
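The core of such a reporter is routing failures to owners. The sketch below assumes each test carries a hypothetical `team` metadata key, which TestCafe lets you attach with `test.meta(...)`, and a hypothetical team-to-channel mapping. In a TestCafe reporter plugin, the `reportTestDone` hook receives each test's name, run info and metadata, so a function like this could be called from there. Check the TestCafe reporter documentation for the exact shapes.

```typescript
// Hypothetical, simplified result of one finished test.
interface TestResult {
  name: string;
  passed: boolean;
  meta: { team?: string };
}

// Hypothetical mapping from owning team to notification channel.
type Channels = Record<string, string>;

interface Notification {
  channel: string;
  message: string;
}

// One notification per failed test, sent to the owning team's channel,
// or to a shared fallback channel when the owner is unknown.
function routeFailures(results: TestResult[], channels: Channels, fallback: string): Notification[] {
  return results
    .filter((result) => !result.passed)
    .map((result) => {
      const team = result.meta.team;
      const channel = (team !== undefined && channels[team]) || fallback;
      return { channel, message: `E2E test failed: "${result.name}" (owner: ${team ?? "unknown"})` };
    });
}
```

The fallback channel is a deliberate choice: a test without an owner still gets noticed, and that shows which tests still need one.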


2️⃣ Continuously improve stability

- Design your tests to be retryable, and automatically retry a test that fails (see quarantine mode in TestCafe).

- Make sure that a test can run twice in parallel on the same environment, because this makes debugging much easier. You may have to add some randomness to your fixtures so that two runs never reference the same data.

- If a test fails randomly, either delete it or fix it right away. Otherwise, teams may lose confidence in the stability and usefulness of end-to-end tests.
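TestCafe's built-in quarantine mode, enabled with the `--quarantine-mode` CLI option, is the framework-native way to retry failing tests. The hypothetical `runWithRetries` helper below is a framework-agnostic sketch of the same idea, not TestCafe's implementation. It retries an async test body and records how many attempts it needed, so that a test that passes only on retry can be flagged as flaky and then fixed or deleted.

```typescript
interface RetryResult<T> {
  value: T;
  attemptsUsed: number;
}

// Runs `body` up to `attempts` times; rethrows the last error if every attempt fails.
async function runWithRetries<T>(body: () => Promise<T>, attempts: number): Promise<RetryResult<T>> {
  if (attempts < 1) throw new RangeError("attempts must be at least 1");
  let lastError: unknown;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return { value: await body(), attemptsUsed: attempt };
    } catch (error) {
      lastError = error;
    }
  }
  throw lastError;
}

// A test that needed more than one attempt passed, but is flaky and should be fixed or deleted.
function isFlaky(result: RetryResult<unknown>): boolean {
  return result.attemptsUsed > 1;
}
```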


3️⃣ Keep a small number of high-value tests

- Start with end-to-end tests that would have prevented high impact regressions you experienced recently.

- Ask the business which user journeys are most critical to them, and test those.

- When there are many paths in a user journey, test only the most critical ones.


4️⃣ End-to-end tests are highly valuable for cross-team migrations, so promote them internally

- In case of large infrastructure or database updates, remind teams to check the end-to-end tests to see whether they broke something. This gives an additional level of confidence to teams whose changes might break code they don't own.

Conclusion

Adding end-to-end tests can be a game changer to avoid regressions and speed up the development cycle. It is an investment at the beginning, but once it runs smoothly in an automated way, it is a precious tool for quality. As the number of services grows, it is critical to keep tests reliable and to have teams fully owning their tests.

Header photo by ThisisEngineering RAEng on Unsplash